Face Generation

# can comment out after executing

!unzip processed_celeba_small.zip

data_dir = 'processed_celeba_small/'

"""

DON'T MODIFY ANYTHING IN THIS CELL

"""

import pickle as pkl

import matplotlib.pyplot as plt

import numpy as np

import problem_unittests as tests

#import helper

%matplotlib inline

Visualize the CelebA Data

The CelebA dataset contains over 200,000 celebrity images with annotations. Since you're going to be generating faces, you won't need the annotations, you'll only need the images. Note that these are color images with 3 color channels (RGB)#RGB_Images) each.

Pre-process and Load the Data

Since the project's main focus is on building the GANs, we've done some of the pre-processing for you. Each of the CelebA images has been cropped to remove parts of the image that don't include a face, then resized down to 64x64x3 NumPy images. This pre-processed dataset is a smaller subset of the very large CelebA data.

There are a few other steps that you'll need to transform this data and create a DataLoader.

Exercise:Complete the following get_dataloader function, such that it satisfies these requirements:

- Your images should be square, Tensor images of size

image_size x image_sizein the x and y dimension. - Your function should return a DataLoader that shuffles and batches these Tensor images.

ImageFolder

To create a dataset given a directory of images, it's recommended that you use PyTorch's ImageFolder wrapper, with a root directory processed_celeba_small/ and data transformation passed in.

# necessary imports

import torch

from torchvision import datasets

from torchvision import transforms

def get_dataloader(batch_size, image_size, data_dir='processed_celeba_small/'):

"""

Batch the neural network data using DataLoader

:param batch_size: The size of each batch; the number of images in a batch

:param img_size: The square size of the image data (x, y)

:param data_dir: Directory where image data is located

:return: DataLoader with batched data

"""

# TODO: Implement function and return a dataloader

transform = transforms.Compose([transforms.Resize(image_size),

transforms.ToTensor()])

dataset = datasets.ImageFolder(data_dir, transform)

return torch.utils.data.DataLoader(dataset, batch_size = batch_size, shuffle=True)

Create a DataLoader

Exercise: Create a DataLoader celeba_train_loader with appropriate hyperparameters.

Call the above function and create a dataloader to view images.

- You can decide on any reasonable

batch_sizeparameter - Your

image_sizemust be32. Resizing the data to a smaller size will make for faster training, while still creating convincing images of faces!

# Define function hyperparameters

batch_size = 40

img_size = 32

"""

DON'T MODIFY ANYTHING IN THIS CELL THAT IS BELOW THIS LINE

"""

# Call your function and get a dataloader

celeba_train_loader = get_dataloader(batch_size, img_size)

Next, you can view some images! You should seen square images of somewhat-centered faces.

Note: You'll need to convert the Tensor images into a NumPy type and transpose the dimensions to correctly display an image, suggested imshow code is below, but it may not be perfect.

# helper display function

def imshow(img):

npimg = img.numpy()

plt.imshow(np.transpose(npimg, (1, 2, 0)))

"""

DON'T MODIFY ANYTHING IN THIS CELL THAT IS BELOW THIS LINE

"""

# obtain one batch of training images

dataiter = iter(celeba_train_loader)

images, _ = dataiter.next() # _ for no labels

# plot the images in the batch, along with the corresponding labels

fig = plt.figure(figsize=(20, 4))

plot_size=20

for idx in np.arange(plot_size):

ax = fig.add_subplot(2, plot_size/2, idx+1, xticks=[], yticks=[])

imshow(images[idx])

Exercise: Pre-process your image data and scale it to a pixel range of -1 to 1

You need to do a bit of pre-processing; you know that the output of a tanh activated generator will contain pixel values in a range from -1 to 1, and so, we need to rescale our training images to a range of -1 to 1. (Right now, they are in a range from 0-1.)

# TODO: Complete the scale function

def scale(x, feature_range=(-1, 1)):

''' Scale takes in an image x and returns that image, scaled

with a feature_range of pixel values from -1 to 1.

This function assumes that the input x is already scaled from 0-1.'''

# assume x is scaled to (0, 1)

# scale to feature_range and return scaled x

min, max = feature_range

return x * (max - min) + min

"""

DON'T MODIFY ANYTHING IN THIS CELL THAT IS BELOW THIS LINE

"""

# check scaled range

# should be close to -1 to 1

img = images[0]

scaled_img = scale(img)

print('Min: ', scaled_img.min())

print('Max: ', scaled_img.max())

Define the Model

A GAN is comprised of two adversarial networks, a discriminator and a generator.

Discriminator

Your first task will be to define the discriminator. This is a convolutional classifier like you've built before, only without any maxpooling layers. To deal with this complex data, it's suggested you use a deep network with normalization. You are also allowed to create any helper functions that may be useful.

Exercise: Complete the Discriminator class

- The inputs to the discriminator are 32x32x3 tensor images

- The output should be a single value that will indicate whether a given image is real or fake

import torch.nn as nn

import torch.nn.functional as F

#conv function

def conv(in_channels, out_channels, kernel_size, stride=2, padding = 1, batch_norm= True):

layers = []

conv_layer = nn.Conv2d(in_channels=in_channels, out_channels=out_channels,kernel_size = kernel_size, stride = stride, padding = padding, bias = False)

layers.append(conv_layer)

if batch_norm:

layers.append(nn.BatchNorm2d(out_channels))

return nn.Sequential(*layers)

class Discriminator(nn.Module):

def __init__(self, conv_dim):

"""

Initialize the Discriminator Module

:param conv_dim: The depth of the first convolutional layer

"""

super(Discriminator, self).__init__()

# complete init function

self.conv_dim = conv_dim

self.conv1 = conv(3, conv_dim, 4, batch_norm=False) # x, y = 64 depth = 3

self.conv2 = conv(conv_dim, conv_dim * 2, 4) # x, y = 32 depth = 64

self.conv3 = conv(conv_dim * 2, conv_dim * 4, 4) # x, y = 16 depth = 128

self.fc = nn.Linear(conv_dim*4*4*4, 1)

self.out = nn.Sigmoid()

self.dropout = nn.Dropout(0.3)

def forward(self, x):

"""

Forward propagation of the neural network

:param x: The input to the neural network

:return: Discriminator logits; the output of the neural network

"""

# define feedforward behavior

x = F.leaky_relu(self.conv1(x), 0.2)

x = F.leaky_relu(self.conv2(x), 0.2)

x = F.leaky_relu(self.conv3(x), 0.2)

x = x.view(-1, self.conv_dim*4*4*4)

x = self.fc(x)

x = self.dropout(x)

return x

"""

DON'T MODIFY ANYTHING IN THIS CELL THAT IS BELOW THIS LINE

"""

tests.test_discriminator(Discriminator)

Generator

The generator should upsample an input and generate a new image of the same size as our training data 32x32x3. This should be mostly transpose convolutional layers with normalization applied to the outputs.

Exercise: Complete the Generator class

- The inputs to the generator are vectors of some length

z_size - The output should be a image of shape

32x32x3

def deconv(in_channels, out_channels, kernel_size, stride=2, padding=1, batch_norm=True):

layers = []

layers.append(nn.ConvTranspose2d(in_channels, out_channels, kernel_size, stride, padding, bias=False))

if batch_norm:

layers.append(nn.BatchNorm2d(out_channels))

return nn.Sequential(*layers)

class Generator(nn.Module):

def __init__(self, z_size, conv_dim):

"""

Initialize the Generator Module

:param z_size: The length of the input latent vector, z

:param conv_dim: The depth of the inputs to the *last* transpose convolutional layer

"""

super(Generator, self).__init__()

# complete init function

self.conv_dim = conv_dim

self.t_conv1 = deconv(conv_dim*4, conv_dim*2, 4 )

self.t_conv2 = deconv(conv_dim*2, conv_dim, 4)

self.t_conv3 = deconv(conv_dim, 3, 4, batch_norm=False)

self.fc = nn.Linear(z_size, conv_dim*4*4*4)

self.dropout = nn.Dropout(0.3)

def forward(self, x):

"""

Forward propagation of the neural network

:param x: The input to the neural network

:return: A 32x32x3 Tensor image as output

"""

# define feedforward behavior

x = self.fc(x)

x = self.dropout(x)

x = x.view(-1, self.conv_dim*4, 4, 4)

x = F.relu(self.t_conv1(x))

x = F.relu(self.t_conv2(x))

x = F.tanh(self.t_conv3(x))

return x

"""

DON'T MODIFY ANYTHING IN THIS CELL THAT IS BELOW THIS LINE

"""

tests.test_generator(Generator)

Initialize the weights of your networks

To help your models converge, you should initialize the weights of the convolutional and linear layers in your model. From reading the original DCGAN paper, they say:

All weights were initialized from a zero-centered Normal distribution with standard deviation 0.02.

So, your next task will be to define a weight initialization function that does just this!

You can refer back to the lesson on weight initialization or even consult existing model code, such as that from the networks.py file in CycleGAN Github repository to help you complete this function.

Exercise: Complete the weight initialization function

- This should initialize only convolutional and linear layers

- Initialize the weights to a normal distribution, centered around 0, with a standard deviation of 0.02.

- The bias terms, if they exist, may be left alone or set to 0.

def weights_init_normal(m):

"""

Applies initial weights to certain layers in a model .

The weights are taken from a normal distribution

with mean = 0, std dev = 0.02.

:param m: A module or layer in a network

"""

# classname will be something like:

# `Conv`, `BatchNorm2d`, `Linear`, etc.

classname = m.__class__.__name__

# TODO: Apply initial weights to convolutional and linear layers

if hasattr(m, 'weight') and (classname.find('Conv') != -1 or classname.find('Linear') != -1):

nn.init.normal_(m.weight.data, 0.0, 0.02)

if hasattr(m.bias, 'data'):

nn.init.constant_(m.bias.data, 0.0)

"""

DON'T MODIFY ANYTHING IN THIS CELL THAT IS BELOW THIS LINE

"""

def build_network(d_conv_dim, g_conv_dim, z_size):

# define discriminator and generator

D = Discriminator(d_conv_dim)

G = Generator(z_size=z_size, conv_dim=g_conv_dim)

# initialize model weights

D.apply(weights_init_normal)

G.apply(weights_init_normal)

print(D)

print()

print(G)

return D, G

# Define model hyperparams

d_conv_dim = 32

g_conv_dim = 32

z_size = 100

"""

DON'T MODIFY ANYTHING IN THIS CELL THAT IS BELOW THIS LINE

"""

D, G = build_network(d_conv_dim, g_conv_dim, z_size)

"""

DON'T MODIFY ANYTHING IN THIS CELL

"""

import torch

# Check for a GPU

train_on_gpu = torch.cuda.is_available()

if not train_on_gpu:

print('No GPU found. Please use a GPU to train your neural network.')

else:

print('Training on GPU!')

Discriminator and Generator Losses

Now we need to calculate the losses for both types of adversarial networks.

Discriminator Losses

- For the discriminator, the total loss is the sum of the losses for real and fake images,

d_loss = d_real_loss + d_fake_loss.- Remember that we want the discriminator to output 1 for real images and 0 for fake images, so we need to set up the losses to reflect that.

Generator Loss

The generator loss will look similar only with flipped labels. The generator's goal is to get the discriminator to think its generated images are real.

Exercise:Complete real and fake loss functions

You may choose to use either cross entropy or a least squares error loss to complete the following real_loss and fake_loss functions.

def real_loss(D_out):

'''Calculates how close discriminator outputs are to being real.

param, D_out: discriminator logits

return: real loss'''

batch_size = D_out.size(0)

labels = torch.ones(batch_size)

if train_on_gpu:

labels = labels.cuda()

criterion = nn.BCEWithLogitsLoss()

loss = criterion(D_out.squeeze(), labels)

return loss

def fake_loss(D_out):

'''Calculates how close discriminator outputs are to being fake.

param, D_out: discriminator logits

return: fake loss'''

batch_size = D_out.size(0)

labels = torch.zeros(batch_size)

if train_on_gpu:

labels = labels.cuda()

criterion = nn.BCEWithLogitsLoss()

loss = criterion(D_out.squeeze(), labels)

return loss

import torch.optim as optim

# Create optimizers for the discriminator D and generator G

d_optimizer = optim.Adam(D.parameters(), lr=0.0005, betas=(0.5, 0.999))

g_optimizer = optim.Adam(G.parameters(), lr=0.0005, betas=(0.5, 0.999))

Training

Training will involve alternating between training the discriminator and the generator. You'll use your functions real_loss and fake_loss to help you calculate the discriminator losses.

- You should train the discriminator by alternating on real and fake images

- Then the generator, which tries to trick the discriminator and should have an opposing loss function

Saving Samples

You've been given some code to print out some loss statistics and save some generated "fake" samples.

def train(D, G, n_epochs, print_every=50):

'''Trains adversarial networks for some number of epochs

param, D: the discriminator network

param, G: the generator network

param, n_epochs: number of epochs to train for

param, print_every: when to print and record the models' losses

return: D and G losses'''

# move models to GPU

if train_on_gpu:

D.cuda()

G.cuda()

# keep track of loss and generated, "fake" samples

samples = []

losses = []

# Get some fixed data for sampling. These are images that are held

# constant throughout training, and allow us to inspect the model's performance

sample_size=16

fixed_z = np.random.uniform(-1, 1, size=(sample_size, z_size))

fixed_z = torch.from_numpy(fixed_z).float()

# move z to GPU if available

if train_on_gpu:

fixed_z = fixed_z.cuda()

# epoch training loop

for epoch in range(n_epochs):

# batch training loop

for batch_i, (real_images, _) in enumerate(celeba_train_loader):

batch_size = real_images.size(0)

real_images = scale(real_images)

# ===============================================

# YOUR CODE HERE: TRAIN THE NETWORKS

# ===============================================

# 1. Train the discriminator on real and fake images

if train_on_gpu:

real_images = real_images.cuda()

d_optimizer.zero_grad()

D_real = D(real_images)

d_real_loss = real_loss(D_real)

z_flex = np.random.uniform(-1, 1, size=(batch_size, z_size))

z_flex = torch.from_numpy(z_flex).float()

if train_on_gpu:

z_flex = z_flex.cuda()

fake_images = G(z_flex)

D_fake = D(fake_images)

d_fake_loss = fake_loss(D_fake)

d_loss = d_real_loss + d_fake_loss

d_loss.backward()

d_optimizer.step()

# 2. Train the generator with an adversarial loss

g_optimizer.zero_grad()

z_flex = np.random.uniform(-1, 1, size=(batch_size, z_size))

z_flex = torch.from_numpy(z_flex).float()

if train_on_gpu:

z_flex = z_flex.cuda()

fake_images = G(z_flex)

D_fake = D(fake_images)

g_loss = real_loss(D_fake)

g_loss.backward()

g_optimizer.step()

# ===============================================

# END OF YOUR CODE

# ===============================================

# Print some loss stats

if batch_i % print_every == 0:

# append discriminator loss and generator loss

losses.append((d_loss.item(), g_loss.item()))

# print discriminator and generator loss

print('Epoch [{:5d}/{:5d}] | d_loss: {:6.4f} | g_loss: {:6.4f}'.format(

epoch+1, n_epochs, d_loss.item(), g_loss.item()))

## AFTER EACH EPOCH##

# this code assumes your generator is named G, feel free to change the name

# generate and save sample, fake images

G.eval() # for generating samples

samples_z = G(fixed_z)

samples.append(samples_z)

G.train() # back to training mode

# Save training generator samples

with open('train_samples.pkl', 'wb') as f:

pkl.dump(samples, f)

# finally return losses

return losses

Set your number of training epochs and train your GAN!

# set number of epochs

n_epochs = 4

"""

DON'T MODIFY ANYTHING IN THIS CELL

"""

# call training function

losses = train(D, G, n_epochs=n_epochs)

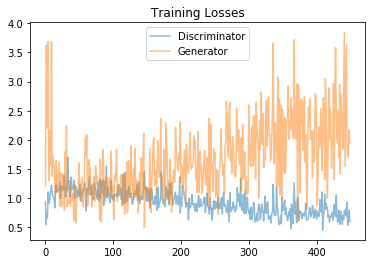

fig, ax = plt.subplots()

losses = np.array(losses)

plt.plot(losses.T[0], label='Discriminator', alpha=0.5)

plt.plot(losses.T[1], label='Generator', alpha=0.5)

plt.title("Training Losses")

plt.legend()

# helper function for viewing a list of passed in sample images

def view_samples(epoch, samples):

fig, axes = plt.subplots(figsize=(16,4), nrows=2, ncols=8, sharey=True, sharex=True)

for ax, img in zip(axes.flatten(), samples[epoch]):

img = img.detach().cpu().numpy()

img = np.transpose(img, (1, 2, 0))

img = ((img + 1)*255 / (2)).astype(np.uint8)

ax.xaxis.set_visible(False)

ax.yaxis.set_visible(False)

im = ax.imshow(img.reshape((32,32,3)))

# Load samples from generator, taken while training

with open('train_samples.pkl', 'rb') as f:

samples = pkl.load(f)

_ = view_samples(-1, samples)

Question: What do you notice about your generated samples and how might you improve this model?

When you answer this question, consider the following factors:

- The dataset is biased; it is made of "celebrity" faces that are mostly white

- Model size; larger models have the opportunity to learn more features in a data feature space

- Optimization strategy; optimizers and number of epochs affect your final result

Answer: (Write your answer in this cell)

The dataset is biased I Think More faces might help while Training GAN for better results to generate a new type of faces.

I trained the model for 10 epochs with different optimizer and network size. and i got learning rate value as 0.0002

I Used Adam, I Think it is the best choice for GAN's

Reducing the epochs can produce the best result.

The images are of very low resulution, which makes it harder to add more CNN layers